Displaying documents for: All Categories

White Paper

Last Updated: May 07, 2025

To help retail and restaurant businesses implement self-checkout, WINTEC has launched smart retail and group-meal self-checkout kiosks based on Intel® architecture. Equipped with 12th Gen Intel® Core™ processors and optimized with the Intel® Distribution of OpenVINO™ Toolkit, these terminals deliver secure, efficient self-checkout with accelerated image recognition model inference.

Tags:

OpenVINO™, Artificial Intelligence, Retail, Smart Cities, Smart Retail

Partner Brief

Last Updated: March 27, 2025

EPIC iO offers a comprehensive range of IoT, connectivity, and AI solutions that seamlessly integrate with your existing infrastructure. Our DeepInsights platform analyzes data from multiple sources, including cameras and IoT/OT sensors, to provide real-time insights and actionable intelligence.

Tags:

Intel® Xeon® Scalable Processors, Government, Retail, Intel® Core™ Processor

Partner Brief

Last Updated: March 27, 2025

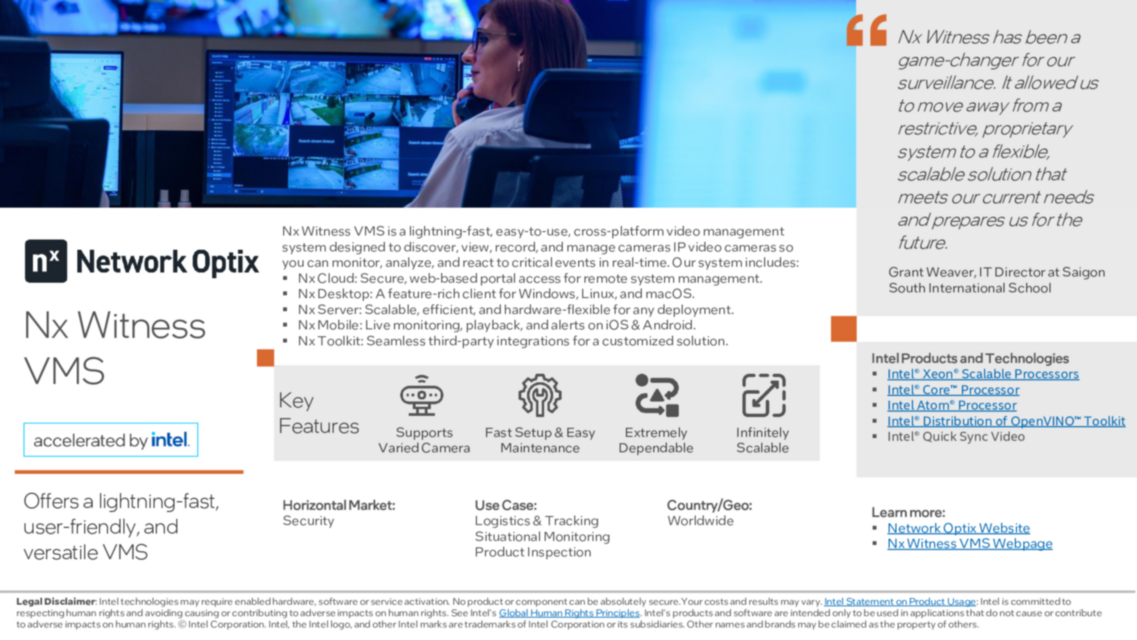

Nx Witness VMS is a lightning-fast, easy-to-use, cross-platform video management system designed to discover, view, record, and manage IP video cameras so you can monitor, analyze, and react to critical events in real time.

Tags:

Intel® Xeon® Scalable Processors, OpenVINO™, Intel® Core™ Processor

Partner Brief

Last Updated: March 27, 2025

With physical and cyber security threats on the rise, it’s more important than ever to level up your business security systems. Milestone XProtect video management software (VMS) connects your cameras, sensors, analytics, and sites in one easy-to-manage interface. You can customize a more future-proof, resilient, and data-safe security environment that lets you see and do more. XProtect is trusted at over 500,000 customer sites, from small-scale installations to multi-site critical infrastructure. Deploy on-premises, or expand your cloud capabilities at your own pace with flexible pure-cloud and hybrid-cloud deployment options.

Tags:

Intel® Xeon® Scalable Processors, OpenVINO™, Government, Retail, Intel® Core™ Processor

White Paper

Last Updated: March 24, 2025

Large language models (LLMs) have enabled breakthroughs in natural language understanding, conversational AI, and diverse applications such as text generation and language translation. However, LLMs are also massive, often exceeding 100 billion parameters, and they continue to grow. A recent article in Scientific American[1] by Lauren Leffer describes the challenges that large AI models pose, including scaling down to small devices, accessibility when disconnected from the internet, and power consumption and environmental concerns.

Tags:

OpenVINO™, Containers, Artificial Intelligence

White Paper

Last Updated: March 24, 2025

This document presents two methods for optimizing the YOLOv4 and YOLOv4-tiny models with customized classes and anchors using the Intel® Distribution of OpenVINO™ Toolkit. An object detection use case built on the optimized models is used to verify the corresponding inference results. The reduced inference time of the optimized models is then demonstrated in an ISV's real-time use case.

Tags:

Intel® Xeon® Scalable Processors, Ethernet Products, DPDK, OpenVINO™, Containers, Cloud Computing, Machine Learning, Artificial Intelligence, Cryptography, Network Edge, Data Analytics

White Paper

Last Updated: March 24, 2025

This guide helps users install and run Ollama with Open WebUI on Intel hardware platforms running Windows* 11 and Ubuntu* 22.04 LTS.

Tags:

Intel® Xeon® Scalable Processors, OpenVINO™, 5G, Virtualization, Network Edge, Cybersecurity, Kubernetes

White Paper

Last Updated: March 24, 2025

This document presents the best known methods (BKMs) for optimizing and quantizing the YOLOv7 model using the Intel® Distribution of OpenVINO™ Toolkit. An object detection use case based on the optimized YOLOv7 model is evaluated on Intel platforms to demonstrate the improved throughput. The potential cost savings of the optimized model in cloud deployment are then illustrated with an ISV's real-time use case.

Tags:

OpenVINO™, 5G, Containers

White Paper

Last Updated: March 24, 2025

This document presents the best known method (BKM) for reducing model load time by enabling model caching in the Intel® Distribution of OpenVINO™ Toolkit. The following figure illustrates the steps involved in implementing the inference pipeline in a user application using the OpenVINO™ Runtime API.

Tags:

OpenVINO™, 5G, Containers

White Paper

Last Updated: March 24, 2025

Large Language Models (LLMs) are deep learning algorithms that have gained significant attention in recent years due to their impressive performance in natural language processing (NLP) tasks. However, deploying LLM applications in production poses several challenges, ranging from hardware-specific limitations to the availability of software toolkits that support LLMs and software optimization for specific hardware platforms. In this whitepaper, we demonstrate how you can perform hardware platform-specific optimization to improve the inference speed of your LLaMA2 model on the llama.

Tags:

Intel® Xeon® Scalable Processors, OpenVINO™, vRAN, 5G, Network Edge

White Paper

Last Updated: March 24, 2025

The OpenVINO™-based C++ demos in Open Model Zoo are built with CMake, which allows developers to build simple to complex software across multiple platforms from a single set of input files. In real business use cases, OpenVINO™ applications are integrated into the end user's target application, and the build steps may vary depending on the build tool used in the production setup. End users who already build their applications with the g++ compiler may not want to switch build tools. To support them, this paper presents the steps to build the C++ object detection demo with the g++ compiler on Ubuntu 20.04.

Tags:

Intel® Xeon® Scalable Processors, Secure Device Onboard, OpenVINO™, 5G, Containers, Cloud Computing, Machine Learning, Artificial Intelligence, Cryptography, Network Edge, Kubernetes, Confidential Computing

White Paper

Last Updated: March 24, 2025

With processing capability increasing year over year, and with additional compute accelerators such as the Neural Processing Unit (NPU), a single system can now run multiple end-to-end workloads, such as QnA (Question and Answer), video surveillance analytics, and retail applications. Intel® Core™ Ultra processors have up to 14 CPU cores with 20 threads, plus two additional low-power efficient cores, and these cores can be allocated to individual workloads using containerization. The CPU suits low-latency workloads, the integrated GPU (iGPU) suits high-throughput tasks, and the NPU targets sustained low-power workloads.

Tags:

OpenVINO™, uCPE, Containers, Video Analytics
