A library for benchmarking, developing and deploying deep learning anomaly detection algorithms


🌟 Announcing v2.2.0 Release! 🌟

We’re thrilled to announce the release of Anomalib v2.2.0, packed with new datasets, metrics, and performance improvements! Some of the highlights are:

New datasets

- 3D-ADAM: A comprehensive dataset for 3D anomaly detection in additive manufacturing.

- BMAD: Benchmarks for Medical Anomaly Detection, featuring six datasets across five medical domains.

New metrics

- PGn and PBn (CVPR 2025): Presorted good/bad metrics for more insightful performance evaluation.

- Histogram visualization of anomaly scores for better interpretability.
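As a rough illustration of what a score histogram shows, the sketch below bins toy anomaly scores into equal-width bins and counts normal and anomalous samples separately; a well-separated model puts the two groups in different bins. It is plain Python written for this example (`score_histogram` is a hypothetical helper, not Anomalib's visualizer), and the scores and labels are made up.

```python
from collections import Counter


def score_histogram(scores, labels, num_bins=5, low=0.0, high=1.0):
    """Count normal (label 0) and anomalous (label 1) scores in equal-width bins over [low, high]."""
    width = (high - low) / num_bins
    counts = {0: Counter(), 1: Counter()}
    for score, label in zip(scores, labels):
        # Clamp out-of-range scores into the first or last bin so nothing is dropped.
        index = min(max(int((score - low) / width), 0), num_bins - 1)
        counts[label][index] += 1
    edges = [low + i * width for i in range(num_bins + 1)]
    normal = [counts[0][i] for i in range(num_bins)]
    anomalous = [counts[1][i] for i in range(num_bins)]
    return edges, normal, anomalous


# Toy scores and ground-truth labels (0 = normal, 1 = anomalous), made up for illustration.
scores = [0.05, 0.12, 0.18, 0.31, 0.44, 0.58, 0.72, 0.86, 0.93, 0.97]
labels = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

edges, normal, anomalous = score_histogram(scores, labels)
for i in range(len(normal)):
    bar_normal = "#" * normal[i]
    bar_anomalous = "#" * anomalous[i]
    print(f"[{edges[i]:.1f}, {edges[i + 1]:.1f})  normal {bar_normal:<4} anomalous {bar_anomalous}")
```

In this toy data, normal scores cluster in the low bins and anomalous ones in the high bins, with a single overlapping bin; that overlap is exactly what a score histogram makes visible.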

Other Improvements

- Faster coreset selection for the PatchCore model, resulting in ~30% quicker training.

- Reduced memory usage for memory-bank-based models such as PatchCore, PaDiM, and DFKDE.

- Many more code and documentation updates.
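PatchCore subsamples its memory bank of patch features with greedy coreset selection. The sketch below shows the textbook greedy k-center (farthest-point) procedure that this kind of subsampling is based on, in plain Python on 2-D toy points. It is an O(n·k) illustration, not Anomalib's optimized implementation, and `greedy_coreset` is a hypothetical name.

```python
import math


def greedy_coreset(features, size, start=0):
    """Greedy k-center (farthest-point) selection; returns indices into `features`."""
    size = min(size, len(features))
    selected = [start]
    # Distance from every point to its nearest already-selected point.
    min_dist = [math.dist(f, features[start]) for f in features]
    while len(selected) < size:
        # The point farthest from the current coreset is the worst-covered one.
        next_index = max(range(len(features)), key=lambda i: min_dist[i])
        selected.append(next_index)
        # Adding a point can only shrink each point's nearest-selected distance.
        min_dist = [min(d, math.dist(f, features[next_index])) for d, f in zip(min_dist, features)]
    return selected


# Toy 2-D "patch features": two tight pairs plus one isolated point.
features = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (0.0, 5.0)]
print(greedy_coreset(features, size=3))  # [0, 3, 4]
```

Each chosen point covers one cluster, so the near-duplicate neighbours (indices 1 and 2) are left out; dropping that redundancy is what keeps a subsampled memory bank small.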

We value your input! Please share feedback via GitHub Issues or our Discussions.

👋 Introduction

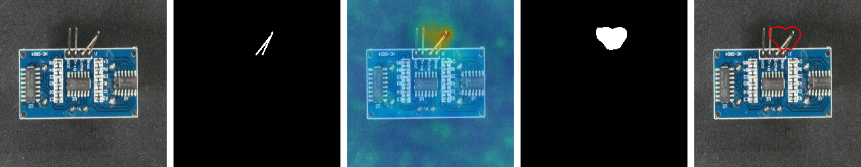

Anomalib is a deep learning library that aims to collect state-of-the-art anomaly detection algorithms for benchmarking on both public and private datasets. Anomalib provides several ready-to-use implementations of anomaly detection algorithms described in the recent literature, as well as a set of tools that facilitate the development and implementation of custom models. The library has a strong focus on visual anomaly detection, where the goal of the algorithm is to detect and/or localize anomalies within images or videos in a dataset. Anomalib is constantly updated with new algorithms and training/inference extensions, so keep checking!
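Detection and localization are often derived from the same per-pixel anomaly map: a common convention (PatchCore, for example, scores an image by its most anomalous patch) takes the maximum of the map as the image-level score, and thresholding the map gives a localization mask. The sketch below assumes a small hand-written map and a hypothetical threshold of 0.5; real models produce the map themselves, and the threshold is normally calibrated on data.

```python
def image_score(anomaly_map):
    """Image-level score: the largest per-pixel (or per-patch) anomaly score."""
    return max(max(row) for row in anomaly_map)


def localize(anomaly_map, threshold):
    """Binary mask marking pixels whose score exceeds the threshold."""
    return [[1 if value > threshold else 0 for value in row] for row in anomaly_map]


# Hand-written 3x3 anomaly map standing in for a model's output.
anomaly_map = [
    [0.1, 0.2, 0.1],
    [0.2, 0.9, 0.7],
    [0.1, 0.3, 0.2],
]
threshold = 0.5  # hypothetical value; in practice chosen on validation data

print(image_score(anomaly_map))  # 0.9
print(localize(anomaly_map, threshold))  # [[0, 0, 0], [0, 1, 1], [0, 0, 0]]
print(image_score(anomaly_map) > threshold)  # True: the image is flagged as anomalous
```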

Key features

- Simple and modular API and CLI for training, inference, benchmarking, and hyperparameter optimization.

- The largest public collection of ready-to-use deep learning anomaly detection algorithms and benchmark datasets.

- Lightning-based model implementations that reduce boilerplate code and limit implementation effort to the bare essentials.

- The majority of models can be exported to OpenVINO Intermediate Representation (IR) for accelerated inference on Intel hardware.

- A set of inference tools for quick and easy deployment of the standard or custom anomaly detection models.

📦 Installation

Anomalib can be installed from PyPI. We recommend using a virtual environment and a modern package installer like uv or pip.

🚀 Quick Install

For a standard installation, you can use uv or pip. This will install the latest version of Anomalib with its core dependencies. PyTorch will be installed based on its default behavior, which usually works for CPU and standard CUDA setups.

```bash
# With uv
uv pip install anomalib

# Or with pip
pip install anomalib
```

For more control over the installation, such as specifying the PyTorch backend (e.g., XPU, CUDA and ROCm) or installing extra dependencies for specific models, see the advanced options below.

💡 Advanced Installation: Specify Hardware Backend

To ensure compatibility with your hardware, you can specify a backend during installation. This is the recommended approach for production environments and for hardware other than CPU or standard CUDA.

**Using `uv`:**

```bash
# CPU support (default, works on all platforms)
uv pip install "anomalib[cpu]"

# CUDA 12.4 support (Linux/Windows with NVIDIA GPU)
uv pip install "anomalib[cu124]"

# CUDA 12.1 support (Linux/Windows with NVIDIA GPU)
uv pip install "anomalib[cu121]"

# CUDA 11.8 support (Linux/Windows with NVIDIA GPU)
uv pip install "anomalib[cu118]"

# ROCm support (Linux with AMD GPU)
uv pip install "anomalib[rocm]"

# Intel XPU support (Linux with Intel GPU)
uv pip install "anomalib[xpu]"
```

**Using `pip`:** the same extras apply, for example:

```bash
pip install "anomalib[cu124]"
```

🧩 Advanced Installation: Additional Dependencies

Anomalib includes most dependencies by default. For specialized features, you may need additional optional dependencies. Remember to include your hardware-specific extra.

```bash
# Example: Install with OpenVINO support and CUDA 12.4
uv pip install "anomalib[openvino,cu124]"

# Example: Install all optional dependencies for a CPU-only setup
uv pip install "anomalib[full,cpu]"
```
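The install examples above combine feature extras (such as `openvino` or `full`) with exactly one hardware extra. The hypothetical helper below, which is not part of Anomalib, builds such a command and rejects unknown or misplaced hardware extras; the set of hardware extras is copied from the backend list in the previous section, and the one-hardware-extra rule is an assumption based on how those examples are written.

```python
# Hardware extras listed in the backend section of this README.
HARDWARE_EXTRAS = {"cpu", "cu124", "cu121", "cu118", "rocm", "xpu"}


def install_command(hardware, features=(), installer="uv pip"):
    """Build an install command that combines feature extras with one hardware extra."""
    if hardware not in HARDWARE_EXTRAS:
        raise ValueError(f"unknown hardware extra: {hardware!r}")
    overlap = HARDWARE_EXTRAS.intersection(features)
    if overlap:
        # A second hardware extra would request a conflicting PyTorch build.
        raise ValueError(f"pass the hardware extra via `hardware`, not `features`: {sorted(overlap)}")
    extras = ",".join([*features, hardware])
    return f'{installer} install "anomalib[{extras}]"'


print(install_command("cu124", features=["openvino"]))  # uv pip install "anomalib[openvino,cu124]"
print(install_command("cpu", features=["full"]))  # uv pip install "anomalib[full,cpu]"
```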

🔧 Advanced Installation: Install from Source

For contributing to `anomalib` or using a development version, you can install from source.

**Using `uv`:** This is the recommended method for developers, as it uses the project's lock file for reproducible environments.

```bash
git clone https://github.com/open-edge-platform/anomalib.git
cd anomalib

# Create the virtual environment
uv venv

# Sync with the lockfile for a specific backend (e.g., CPU)
uv sync --extra cpu

# Or for a different backend like CUDA 12.4
uv sync --extra cu124

# To set up a full development environment
uv sync --extra dev --extra cpu
```

**Using `pip`:**

```bash
git clone https://github.com/open-edge-platform/anomalib.git
cd anomalib

# Install in editable mode with a specific backend
pip install -e ".[cpu]"

# Install with development dependencies
pip install -e ".[dev,cpu]"
```

🧠 Training

Anomalib supports both API and CLI-based training approaches:

🔌 Python API

```python
from anomalib.data import MVTecAD
from anomalib.models import Patchcore
from anomalib.engine import Engine

# Initialize components
datamodule = MVTecAD()
model = Patchcore()
engine = Engine()

# Train the model
engine.fit(datamodule=datamodule, model=model)
```

⌨️ Command Line

```bash
# Train with default settings
anomalib train --model Patchcore --data anomalib.data.MVTecAD

# Train with custom category
anomalib train --model Patchcore --data anomalib.data.MVTecAD --data.category transistor

# Train with config file
anomalib train --config path/to/config.yaml
```
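Dotted flags such as `--data.category transistor` address a nested key in the run configuration, which is why the same settings can live in a config file instead. The sketch below models that rule on a plain nested dict; `apply_overrides` is hypothetical, and the real CLI (assumed here to follow jsonargparse conventions) also resolves class paths, `init_args`, and type conversion, none of which this sketch attempts.

```python
def apply_overrides(config, args):
    """Apply `--a.b value` flag pairs to a nested dict, creating sub-dicts as needed."""
    tokens = iter(args)
    for flag in tokens:
        if not flag.startswith("--"):
            raise ValueError(f"expected a flag, got {flag!r}")
        value = next(tokens, None)
        if value is None:
            raise ValueError(f"missing value for {flag}")
        # "--data.category" -> parent keys ["data"], leaf key "category"
        *parents, leaf = flag[2:].split(".")
        node = config
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value  # values stay strings; a real CLI would also convert types
    return config


# A plain dict standing in for the parsed configuration.
config = {"model": "Patchcore", "data": {"category": "bottle"}}
apply_overrides(config, ["--data.category", "transistor"])
print(config)  # {'model': 'Patchcore', 'data': {'category': 'transistor'}}
```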

🤖 Inference

Anomalib provides multiple inference options, including Torch, Lightning, Gradio, and OpenVINO. Here's how to get started:

🔌 Python API

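```python
# Reuses `engine`, `datamodule`, and `model` from the training example above.
# Load the trained weights from a checkpoint and make predictions.
predictions = engine.predict(
    datamodule=datamodule,
    model=model,
    ckpt_path="path/to/checkpoint.ckpt",
)
```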

⌨️ Command Line

# Basic prediction

```bash
# Basic prediction
anomalib predict --model anomalib.models.Patchcore \
    --data anomalib.data.MVTecAD \
    --ckpt_path path/to/model.ckpt

# Prediction with results
anomalib predict --model anomalib.models.Patchcore \
    --data anomalib.data.MVTecAD \
    --ckpt_path path/to/model.ckpt \
    --return_predictions
```

📘 Note: For advanced inference options including Gradio and OpenVINO, check our Inference Documentation.

Training on Intel GPUs

📘 Note: Currently, only single-GPU training is supported on Intel GPUs. These commands were tested on Intel Arc A750 and Arc A770.

Ensure that PyTorch with XPU support is installed. For more information, refer to the PyTorch XPU documentation.

🔌 API

```python
from anomalib.data import MVTecAD
from anomalib.engine import Engine, SingleXPUStrategy, XPUAccelerator
from anomalib.models import Stfpm

engine = Engine(
    strategy=SingleXPUStrategy(),
    accelerator=XPUAccelerator(),
)
engine.train(Stfpm(), datamodule=MVTecAD())
```

⌨️ CLI

```bash
anomalib train --model Padim --data MVTecAD --trainer.accelerator xpu --trainer.strategy xpu_single
```

⚙️ Hyperparameter Optimization

Anomalib supports hyperparameter optimization (HPO) using Weights & Biases and Comet.ml.

```bash
# Run HPO with Weights & Biases
anomalib hpo --backend WANDB --sweep_config tools/hpo/configs/wandb.yaml
```

📘 Note: For detailed HPO configuration, check our HPO Documentation.
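Conceptually, a sweep repeatedly samples a configuration, trains, and records a metric; W&B sweeps, for instance, support grid, random, and Bayesian search. The sketch below shows plain random search with a toy objective standing in for "train and evaluate". The search space, parameter names, and `random_search` helper are all hypothetical and are not Anomalib's HPO code.

```python
import math
import random


def random_search(objective, space, trials, seed=0):
    """Sample `trials` configurations uniformly from `space` and keep the best-scoring one."""
    rng = random.Random(seed)  # seeded so repeated runs sample the same configurations
    best_params, best_score = None, float("-inf")
    for _ in range(trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space.
space = {"learning_rate": [1e-4, 1e-3, 1e-2], "batch_size": [16, 32]}


def toy_objective(params):
    # Toy stand-in for a validation metric: peaks at learning_rate=1e-3, batch_size=32.
    lr_penalty = abs(math.log10(params["learning_rate"]) + 3)
    batch_penalty = 0.0 if params["batch_size"] == 32 else 0.5
    return -(lr_penalty + batch_penalty)


print(random_search(toy_objective, space, trials=20))
```

Random search is the simplest baseline; a Bayesian method would instead use earlier scores to decide which configuration to try next.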

🧪 Experiment Management

Track your experiments with popular logging platforms through PyTorch Lightning loggers:

- 📊 Weights & Biases

- 📈 Comet.ml

- 📉 TensorBoard

Enable logging in your config file to track:

- Hyperparameters

- Metrics

- Model graphs

- Test predictions

📘 Note: For logging setup, see our Logging Documentation.

📊 Benchmarking

Evaluate and compare model performance across different datasets:

# Run benchmarking with default configuration

anomalib benchmark --config tools/experimental/benchmarking/sample.yaml

💡 Tip: Check each model's performance in its respective README file.

✍️ Reference

If you find Anomalib useful in your research or work, please cite:

```bibtex
@inproceedings{akcay2022anomalib,
  title={Anomalib: A deep learning library for anomaly detection},
  author={Akcay, Samet and Ameln, Dick and Vaidya, Ashwin and Lakshmanan, Barath and Ahuja, Nilesh and Genc, Utku},
  booktitle={2022 IEEE International Conference on Image Processing (ICIP)},
  pages={1706--1710},
  year={2022},
  organization={IEEE}
}
```

👥 Contributing

We welcome contributions! Check out our Contributing Guide to get started.

Thank you to all our contributors!